
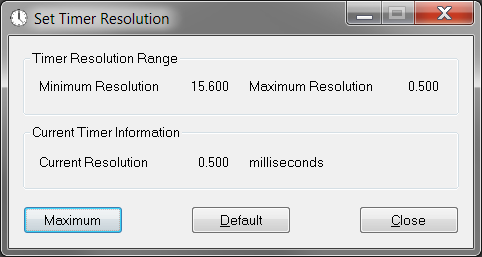

| Seen here, in all its majesty |

This specific, very simple app (only 32k in size!) does not affect the game in any way, shape, or form. In fact, it was originally authored back in 2007, way before King of the Kill. Instead, it tells Windows to check its timers more often. That's it.

Imagine this. You need to do something in 30 seconds, but you only have a clock with a minute hand (no second hand). You glance at the clock and it says 4:59 pm. Once it changes to 5:00 pm, have 30 seconds elapsed? Not necessarily! What if you glanced at the clock a mere second before it changed? To guarantee that at least 30 seconds have passed, you would actually have to wait until 5:01 pm, and by then up to 1 minute 59 seconds could have passed!

This is the nature of resolution: how often you can check the clock and have it tell you a different value. Now, computers do things a LOT faster than even once a second. Computers can do things in the nanosecond range (1/1,000,000,000 of a second) or even faster! When I started up the Windows 10 machine that I'm writing this post with, the resolution was 15.625 milliseconds (~0.016 sec). That's WAY slower than 0.000000001 sec! In fact, at that resolution Windows only checks the clock 64 times per second, which can be slower than some frame rates that people get when playing King of the Kill.

When we do Windows programming and set a timer or tell a thread to sleep, we specify values in milliseconds (1/1,000 sec). But if Windows is only checking the clock every 15.625 milliseconds, a 1 millisecond timer can end up waiting 15.625 milliseconds, which is more than a whole frame in some cases. Obviously we want Windows to check the clock much more often than every 15.625 milliseconds.
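To put numbers on that, here is a small Python sketch of the effect. It is a simplified model, not how Windows actually schedules threads: it assumes a sleeping thread can only wake on a clock tick, and that it wakes on the first tick at or after its deadline. The names `wake_time` and `actual_wait` are made up for this illustration.

```python
import math

# Resolution quoted above for a freshly started Windows 10 machine.
DEFAULT_TICK_MS = 15.625


def wake_time(start_ms: float, requested_ms: float, tick_ms: float) -> float:
    """Return the first tick boundary at or after the requested deadline.

    Simplifying assumption: ticks fall at exact multiples of tick_ms.
    """
    deadline = start_ms + requested_ms
    return math.ceil(deadline / tick_ms) * tick_ms


def actual_wait(start_ms: float, requested_ms: float, tick_ms: float) -> float:
    """Return how long the sleeper really waits under this model."""
    return wake_time(start_ms, requested_ms, tick_ms) - start_ms


if __name__ == "__main__":
    # How many times per second the clock is checked at the default resolution.
    print("ticks per second:", 1000 / DEFAULT_TICK_MS)

    # A 1 ms sleep that starts 0.5 ms after a tick, at two resolutions.
    for tick in (DEFAULT_TICK_MS, 1.0):
        print(f"tick={tick} ms -> waited {actual_wait(0.5, 1.0, tick)} ms")
```

In this model, a 1 millisecond sleep that starts 0.5 milliseconds after a tick waits 15.125 milliseconds at the default resolution, but only 1.5 milliseconds at 1 millisecond resolution.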


| This is about how often I check my phone. And I always forget to check the time. |

Yes, but does it WORK?

At first I thought this tool might have some confirmation bias behind it, but after digging into it, I'm going to say that it's plausible that it has a positive effect on gameplay. Windows has but one internal timer, and it's shared by everything running on the system. When Daybreak titles start up, we tell Windows that we want 1 millisecond resolution on the system timer. But, to be good software citizens, when the game is ending we tell Windows to set the timer resolution back to what it was. Seems reasonable, right?

How does Set Timer Resolution work?

This section is not for the technically faint of heart. I'm going to wax programmatic on you. First of all, when the game starts up, we call a documented function called timeBeginPeriod with the minimum value reported by the timeGetDevCaps function (generally 1 [millisecond]). This would probably work fine in many cases as long as our game is the only thing running on the machine. But that is never the case. Little programs that do behind-the-scenes things can start and end and do all sorts of stuff. Browsers can be running with multiple tabs open. Streamer software. Video recording software. Etc. If any of those things affects the system timer while the game is running, then bad things happen.
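For the curious, the startup and shutdown dance described above can be sketched in a few lines of Python using `ctypes`. This is an illustration only, not the game's actual code: the helper name `finest_timer_period` is made up here, and on anything other than Windows it simply does nothing. The three functions it calls (timeGetDevCaps, timeBeginPeriod, timeEndPeriod) are the documented ones from winmm.dll.

```python
import contextlib
import ctypes
import sys


class TIMECAPS(ctypes.Structure):
    # Mirrors the Win32 TIMECAPS struct: supported period range in milliseconds.
    _fields_ = [("wPeriodMin", ctypes.c_uint), ("wPeriodMax", ctypes.c_uint)]


@contextlib.contextmanager
def finest_timer_period():
    """Request the finest supported timer period for the duration of a block.

    Yields the requested period in milliseconds, or None when not on Windows.
    (Hypothetical helper for illustration.)
    """
    if sys.platform != "win32":
        yield None  # Nothing to do: winmm.dll only exists on Windows.
        return

    winmm = ctypes.WinDLL("winmm")
    caps = TIMECAPS()
    # Ask what range of periods the system supports; 0 means success.
    if winmm.timeGetDevCaps(ctypes.byref(caps), ctypes.sizeof(caps)) != 0:
        raise OSError("timeGetDevCaps failed")

    period = caps.wPeriodMin  # generally 1 (millisecond)
    if winmm.timeBeginPeriod(period) != 0:
        raise OSError("timeBeginPeriod failed")
    try:
        yield period
    finally:
        # Be a good citizen: pair every timeBeginPeriod with a
        # timeEndPeriod call that uses the same value.
        winmm.timeEndPeriod(period)


if __name__ == "__main__":
    with finest_timer_period() as period:
        print("requested period (ms):", period)
```

Wrapping the request in a context manager keeps the begin and end calls paired even if something inside the block throws.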

It looks like Set Timer Resolution goes even deeper than the multimedia functions (like timeBeginPeriod) that our titles are calling. It goes straight to the kernel, the heart of every operating system. It looks like it's calling some undocumented kernel functions that are callable from user mode: NtQueryTimerResolution and NtSetTimerResolution. The multimedia functions that our titles use likely call these deeper down.
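If you want to peek at the current value yourself, here is a read-only Python sketch that calls NtQueryTimerResolution through `ctypes`. Big caveat: the function is undocumented, so the signature used here (three output pointers, values in 100-nanosecond units) comes from commonly circulated native API headers and should be treated as an assumption. The helper names are made up for this example, and the sketch returns None on anything other than Windows.

```python
import ctypes
import sys


def hundred_ns_to_ms(units: int) -> float:
    """Convert a count of 100-nanosecond units to milliseconds."""
    return units / 10_000


def query_timer_resolution_ms():
    """Return (bound_a, bound_b, current) in milliseconds, or None off Windows.

    Assumption: NtQueryTimerResolution takes three ULONG output pointers,
    the first two bounding the supported range and the third holding the
    current resolution, all in 100 ns units.
    """
    if sys.platform != "win32":
        return None

    ntdll = ctypes.WinDLL("ntdll")
    bound_a = ctypes.c_ulong()
    bound_b = ctypes.c_ulong()
    current = ctypes.c_ulong()
    status = ntdll.NtQueryTimerResolution(
        ctypes.byref(bound_a), ctypes.byref(bound_b), ctypes.byref(current)
    )
    if status != 0:  # 0 is STATUS_SUCCESS
        raise OSError(f"NtQueryTimerResolution returned {status & 0xFFFFFFFF:#x}")
    return tuple(hundred_ns_to_ms(v.value) for v in (bound_a, bound_b, current))


if __name__ == "__main__":
    print(query_timer_resolution_ms())
```

Under that unit assumption, a raw value of 156250 corresponds to the 15.625 milliseconds mentioned earlier.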

So where do we go from here?

I'd like to make Set Timer Resolution completely unnecessary. Since the game is already trying to set the timer resolution at startup, it seems like we could be doing a better job of making sure it stays set. I'll evaluate this against our current priorities and talk with the team about getting this in an upcoming hotfix.


| Boom. |